Read insights from our CEO, Mr. Saurabh Jain, on how organizations can leverage Domain-Intelligent AI to create a sustainable, high-impact talent acquisition operating model in this exclusive interview with Dataquest:

Link to article: Harnessing AI for Talent Acquisition: Saurabh Jain, Founder & CEO of Spire.AI

Artificial intelligence (AI) is rapidly transforming industries, automating tasks, and generating valuable insights. However, for businesses to leverage the full potential of AI, it’s crucial to ensure its responsible development and implementation. This is where the concept of responsible AI comes in.

What is Responsible AI?

Responsible AI refers to principles and practices guiding the design, development, deployment, and use of AI systems. It’s about building trust in AI by ensuring it’s fair, transparent, accountable, and aligned with ethical considerations.

“When implementing AI in business operations, organizations must establish clear ethical principles such as fairness, transparency, and accountability.” – Saurabh Jain, CEO, Spire.AI.

Core Principles of Responsible AI

Fairness

AI systems shouldn’t perpetuate biases or discrimination present in the data they’re trained on. This requires careful data selection during development to ensure the training data represents the real world. It’s also essential to have ongoing monitoring to identify and mitigate bias creep, which can occur as data and usage patterns evolve.

Imagine an AI system used for loan approvals that is accidentally biased against a particular demographic group due to historical biases in the data it was trained on. Responsible AI practices would ensure that such biases are identified and addressed before the system is deployed.
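One simple way to surface this kind of bias before deployment is to compare approval rates across groups. The sketch below is illustrative only: the group labels, the sample decisions, and the function names are made up for this example, and the 0.8 threshold borrows the "four-fifths" rule of thumb from employment-selection guidance as an assumption, not as a standard for lending. A real audit would use more than one fairness metric.

```python
from collections import defaultdict


def approval_rates(decisions):
    """Return the approval rate per group from (group, approved) pairs."""
    totals = defaultdict(int)
    approved = defaultdict(int)
    for group, was_approved in decisions:
        totals[group] += 1
        if was_approved:
            approved[group] += 1
    return {group: approved[group] / totals[group] for group in totals}


def disparate_impact_ratio(decisions):
    """Lowest group approval rate divided by the highest group approval rate."""
    rates = approval_rates(decisions)
    highest = max(rates.values())
    if highest == 0:
        return 1.0  # nobody was approved in any group, so there is no gap to measure
    return min(rates.values()) / highest


# Hypothetical decisions: group "A" approved 8 of 10, group "B" approved 5 of 10.
sample = (
    [("A", True)] * 8 + [("A", False)] * 2
    + [("B", True)] * 5 + [("B", False)] * 5
)

FLAG_THRESHOLD = 0.8  # assumed review threshold (four-fifths rule of thumb)
ratio = disparate_impact_ratio(sample)
needs_review = ratio < FLAG_THRESHOLD
print(f"disparate impact ratio: {ratio:.3f}, needs review: {needs_review}")
```

A ratio well below the threshold does not prove discrimination, but it is a signal that the training data and model should be examined before the system goes live.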

Transparency

Understanding how AI systems arrive at decisions is critical. Explainable AI techniques that shed light on the reasoning behind AI outputs can achieve this. Explainable AI methods can range from simple decision trees to more complex strategies that allow users to trace an AI’s decision-making process step-by-step.

“Organizations should ensure that AI system providers are committed to these ethical frameworks, providing clear explanations for the decisions made by the systems and backing the claims with data.” – Saurabh Jain, CEO, Spire.AI.

By employing explainable AI techniques, businesses can gain insights into how AI systems arrive at their conclusions, fostering trust and enabling informed decision-making.
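To make the idea of a traceable decision concrete, here is a minimal sketch of a hand-written decision tree that returns both its outcome and the list of rules it followed. Everything in it is hypothetical: the `screen_application` function, the field names, and the thresholds are invented for illustration and do not describe any particular product or model.

```python
def screen_application(applicant):
    """Return (decision, trace) for a toy, hand-written decision tree.

    The trace lists every rule that was evaluated, in order, so a reviewer
    can see exactly why the decision was reached.
    """
    trace = []
    if applicant["income"] >= 40_000:  # hypothetical threshold
        trace.append("income >= 40000")
        if applicant["debt_ratio"] <= 0.35:  # hypothetical threshold
            trace.append("debt_ratio <= 0.35")
            return "approve", trace
        trace.append("debt_ratio > 0.35")
        return "manual review", trace
    trace.append("income < 40000")
    return "decline", trace


decision, trace = screen_application({"income": 52_000, "debt_ratio": 0.41})
print(decision)           # manual review
print(" -> ".join(trace))  # income >= 40000 -> debt_ratio > 0.35
```

More complex models need dedicated explanation techniques, but the goal is the same: every output should come with reasoning that a person can inspect and challenge.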

Accountability

There should be clear ownership and responsibility for developing, deploying, and using AI systems. This ensures that any potential negative impacts can be traced and addressed. Establishing clear lines of accountability helps prevent unintended consequences and ensures that AI systems are used safely and responsibly.

Why Responsible AI Matters

In the business world, responsible AI is not just an ethical imperative; it’s a competitive advantage. Here’s why:

- Builds Trust: Trustworthy AI fosters confidence in its capabilities, leading to broader AI adoption and better organizational decision-making. Employees who understand how AI systems work and trust their outputs are more likely to use them effectively.

- Mitigates Risk: Proactive attention to responsible AI practices helps minimize legal and reputational risks associated with biased or opaque AI systems. For example, an AI-driven recruitment tool could invite accusations of discrimination if no one can explain how it reached its decisions.

- Enhances ROI: Responsible AI helps businesses maximize their AI investments and avoid costly errors by ensuring fairness and transparency. Biased AI systems can lead to inaccurate results and poor decision-making, ultimately costing businesses time and money.

Responsible AI in the Enterprise

Here are some key considerations for building responsible AI in the enterprise:

- Ethical Framework: Establish a clear ethical framework for AI development and deployment that is aligned with fairness, transparency, and accountability principles. This framework should be documented and communicated to all stakeholders involved in AI projects.

- Data Management: Implement robust data management practices to ensure data quality, security, and compliance with relevant regulations.

“Strict data management practices are essential to protect sensitive information, prevent misuse, and ensure regulations remain relevant and effective in a rapidly changing technological landscape.” – Saurabh Jain, CEO, Spire.AI.

Responsible data management involves collecting high-quality data, ensuring proper data security measures, and adhering to data privacy regulations.

- Monitoring and Auditing: Regularly audit AI systems to identify and address potential issues like bias or unintended consequences. This proactive approach allows for early detection and mitigation of any possible problems.

- Explainability: Invest in explainable AI techniques that show how AI systems make decisions, enabling human oversight and informed action. Explainability builds trust by letting users understand the reasoning behind a system’s outputs.

- Vendor Selection: When choosing AI solutions, prioritize vendors demonstrating a commitment to responsible AI principles. Partnering with vendors who share your commitment to responsible AI ensures that your solutions align with your ethical framework.

- Continuous Improvement: Embrace a culture of continuous improvement, regularly updating AI systems and practices to keep development responsible. AI is constantly evolving, and responsible AI practices require ongoing evaluation and adaptation.

Spire.AI and Responsible AI

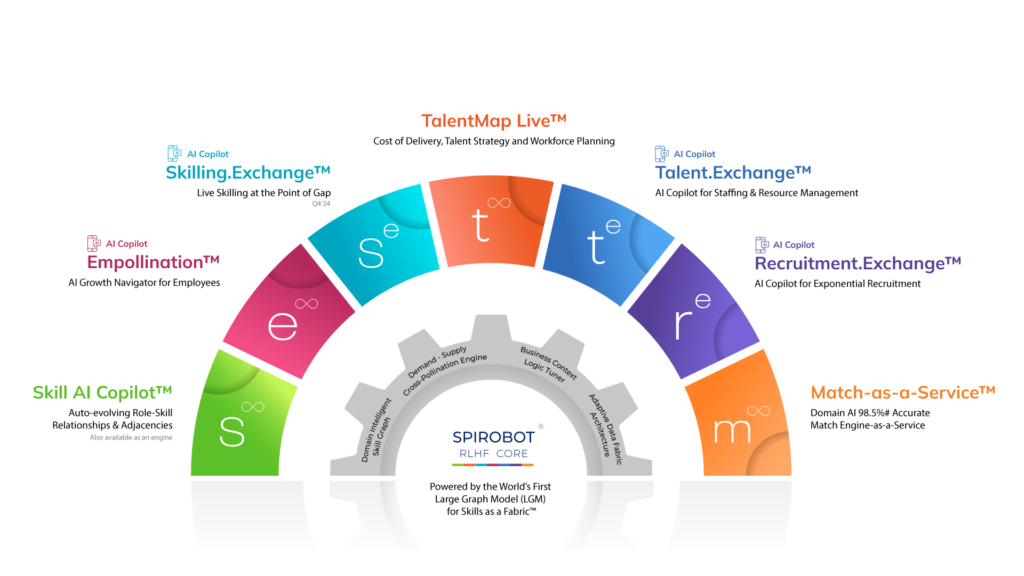

Spire.AI Copilot for Talent is committed to promoting responsible AI practices through its solutions. Here’s how Spire.AI integrates responsible AI principles:

- Transparency: Spire.AI’s talent solutions are designed to be transparent. For example, AI-generated skill profiles give employees insights into how their skills are identified and categorized. This transparency helps to build trust and understanding.

- Fairness: Spire.AI’s AI models are trained on diverse datasets to mitigate bias. Additionally, the platform considers factors beyond skills, such as experience and cultural fit, to ensure fair talent assessments.

- Explainability: Spire.AI offers features like career path recommendations with clear rationales. This allows employees to understand the basis for these suggestions and make informed decisions about their career development.

- Human Oversight: While AI plays a significant role in Spire.AI’s solutions, human oversight remains crucial. For example, HR professionals review AI-generated recommendations for talent acquisition and development, and employees and managers validate skill profiles before they are ready for use.

Building a Future with Responsible AI

By prioritizing responsible AI, businesses can unlock the full potential of this transformative technology. Trustworthy AI fosters a more equitable and ethical future for AI applications, benefiting businesses and society. However, building a future with responsible AI requires ongoing efforts from various stakeholders.

Collaboration is Key

Saurabh Jain emphasizes the importance of collaboration in his interview:

“Organizations should ensure that AI system providers are committed to these ethical frameworks… This approach will foster trust and enable informed decision-making among all talent stakeholders.” – Saurabh Jain, CEO, Spire.AI.

Achieving responsible AI necessitates collaboration between:

- Enterprises and AI developers: Enterprises should ensure that chosen AI solutions are built on responsible AI principles. Open communication with developers regarding data practices, explainability, and potential biases is crucial.

- Academia and research institutions: Continued research on explainable AI techniques, fairness metrics, and responsible AI development methodologies is essential. Collaboration between businesses and academic researchers can accelerate progress in this area.

- Policymakers and regulators: Establishing clear regulations and frameworks for responsible AI development and deployment is vital. Businesses should actively participate in discussions with policymakers to ensure rules are practical and effective.

- Civil society organizations: Civil society groups can play a vital role in raising awareness about potential risks associated with AI and advocating for ethical considerations in AI development.

The Future of Responsible AI

Looking ahead, the future of responsible AI holds promise for a more equitable and prosperous world. Here are some key areas of focus:

- Standardization of Responsible AI Practices: Developing standardized frameworks and best practices for responsible AI development will ensure consistency and facilitate broader adoption across industries.

- Education and Awareness: Educating the public and workforce about AI capabilities, limitations, and potential risks associated with AI is crucial for building trust and ensuring responsible use of this technology.

Conclusion

Building a future with responsible AI is a collective responsibility. By working together, businesses, developers, policymakers, and the public can ensure that AI is a force for good, driving positive change across all aspects of society. As Saurabh Jain suggests, “Ethical AI development should include continuous monitoring and regular updates to their systems and practices… Organizations can prioritize responsible and unbiased AI to achieve their business objectives while maintaining ethical integrity in their AI-driven operations.” By prioritizing responsible AI principles today, we can pave the way for a brighter future powered by ethical and trustworthy AI.

Frequently Asked Questions

What is responsible AI?

Responsible AI refers to principles and practices guiding the design, development, deployment, and use of AI systems that are fair, transparent, accountable, and aligned with ethical considerations.

What is responsible AI and AI governance?

Responsible AI and AI governance encompass the frameworks and practices for developing, deploying, and managing AI systems ethically and responsibly.